Cultural intelligence and bias in AI models

There’s something almost nobody is talking about in AI - but it affects everything from asking ChatGPT for advice to companies deploying AI globally.

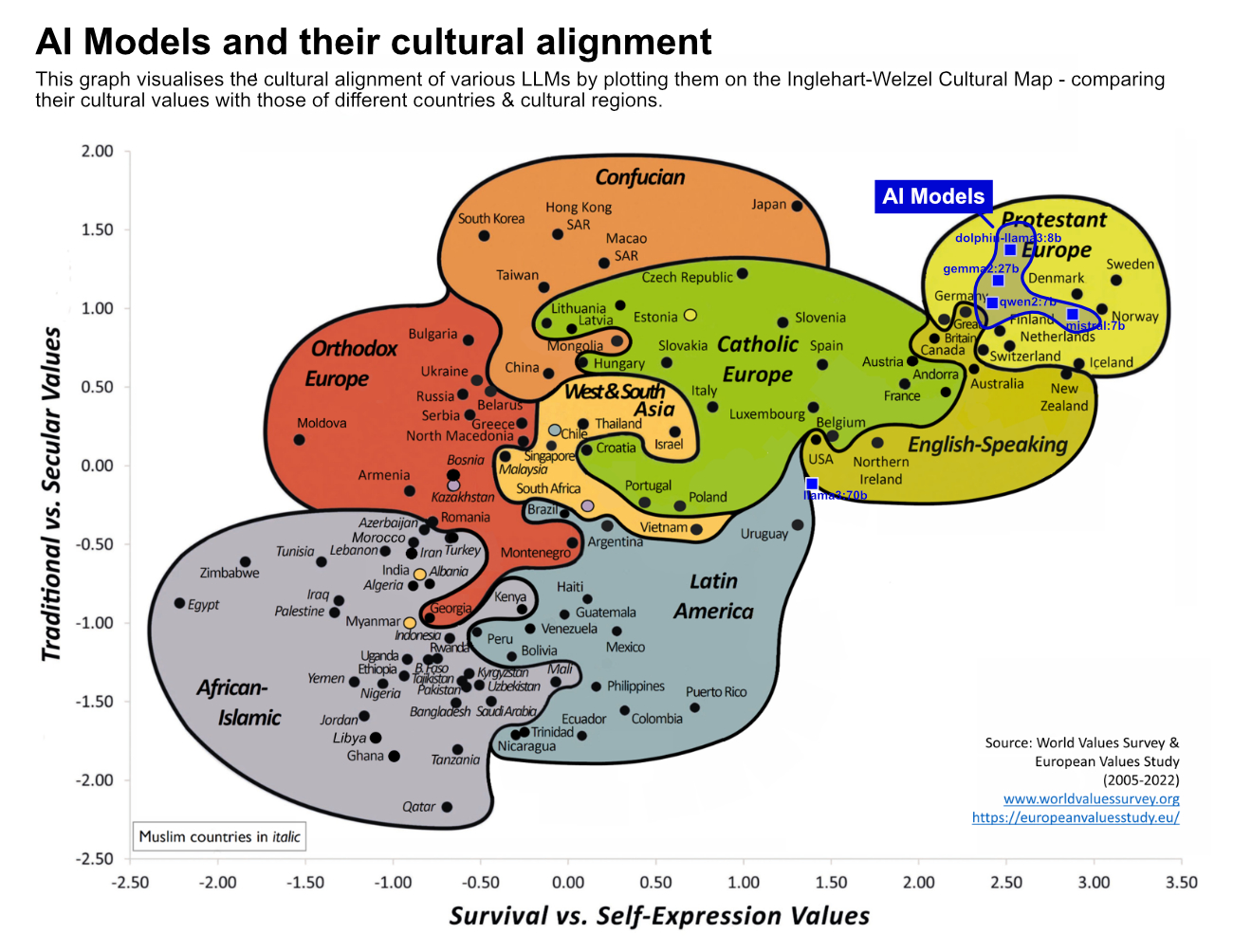

A fascinating study tested major AI models (the foundations of tools that millions of people use daily) against cultural values from 107 countries.

The result? Each one reflected the same assumptions - those of English-speaking, Western European societies. None aligned with how people in Africa, Latin America, or the Middle East actually build trust, show respect, or resolve conflicts.

Why does this matter? Imagine you’re a global company rolling out AI customer service. Your system learns “best practice”: when customers complain about late orders, “apologise briefly, offer a discount, and focus on quick resolution”.

In Germany, the direct, efficient approach works perfectly. Customer satisfied.

But in Japan, that brief apology falls short. Causing meiwaku (trouble or inconvenience to others) is taken seriously, and it calls for a deep, sincere acknowledgement. Your “efficient” response feels dismissive and damages customer relationships.

And in the UAE, the discount offer backfires completely. It feels like charity rather than respect.

One AI system, similar contexts, completely different cultural outcomes.

This isn’t intentional, though it is close to inevitable. Large language models (LLMs) absorb patterns of communication from their training data, and most of that data comes from billions of English-language web pages. The result? AI systems that, unless thoughtfully shaped, are blind to the diversity of human interaction.

Klarna, the global payments company, made headlines in 2024 when they introduced an AI system that “did the work of 700 customer service reps”, handled 2.5 million conversations in 35 languages, and cut response time by 82%. Technical triumph.

Fourteen months later came the headline: “Klarna reverses AI strategy and is hiring humans again”. Their CEO admitted the approach had led to “lower quality”, and some reports cited a drop of more than 20% in customer satisfaction.

What I think really happened: Klarna optimised for 35 languages while completely missing 35 different ways humans expect to be treated.

The challenge? Most companies focus on technical integration and miss cultural intelligence entirely. We measure response time and cost savings, but never ask, “Which human complexities are we overlooking?”

The goal isn’t neutrality, though; that’s both impossible and undesirable. The goal is conscious awareness: understanding that the output of AI models is filtered through a specific cultural lens.

For companies building AI strategies, key questions worth asking:

- Which cultural assumptions are embedded in our AI systems?

- How do we test cultural intelligence alongside technical performance?

- Who provides this expertise in our AI teams?

The individuals and organisations that develop this conscious awareness will make better decisions, while others unknowingly apply one-size-fits-all approaches to beautifully diverse human contexts.

---

This post was adapted from my original LinkedIn post published in August 2025.